Bringing LEGO minifigures to life using AR and emotion recognition

Wednesday, May 3, 2017


Richard Harris

A Q&A with Goran Vuksic, creator of a mobile LEGO augmented reality app

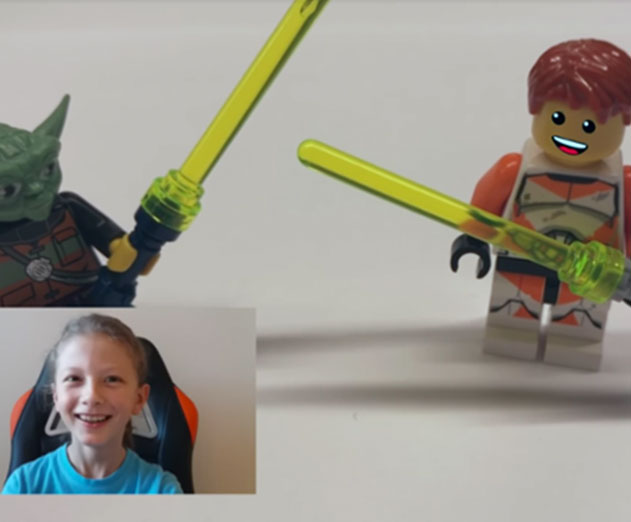

Goran Vuksic, an iOS developer for Tattoodo, has built an app that brings a childhood fantasy to life. It uses emotion AI and augmented reality SDKs to turn LEGO minifigures into interactive characters. The app analyzes the user's facial expressions to infer their current mood. It then projects that mood onto the minifigure's face in real time, in augmented reality, using the device's front- and rear-facing cameras. To find out how he created the project, we sat down with its maker.

ADM: Tell us a bit about your project with LEGO minifigures. What is the experience that you have created? What piqued your interest in emotion-enabled toys?

Vuksic: Using emotion recognition technology in combination with augmented reality, I built an iOS app to “give life” to LEGO minifigures. Leveraging Affectiva and Vuforia SDKs, the app recognizes the emotions of users playing with LEGO toys, and then renders their emotions onto the LEGO faces in augmented reality.

I was inspired to create this project for a couple of reasons. For one, I'm very interested in augmented reality, and I often create augmented reality applications in my free time. I also have a 9-year-old son, and I wanted to create something to entertain him. He plays with LEGO toys a lot, and I noticed that if you turn a LEGO minifigure's head 180 degrees, the yellow face appears blank. I thought it would be fun to make the LEGO more interactive by using emotion recognition technology to make the toy's facial expression mirror the user's in real time.

ADM: Why did you choose emotion recognition technology to support the experience? Did you consider any other approaches?

Vuksic: Emotion recognition, and specifically emotion artificial intelligence (AI), played a key role in this project, and the app probably wouldn't be as much fun if I had built it any other way. Emotion recognition makes the experience more interactive and personalized than simply rendering facial expressions in augmented reality that are unrelated to the user's own emotions. My family had a lot of fun testing the application: when you see the LEGO minifigures mimic your emotions, you can't help but laugh.

ADM: What was the process of developing your project, and bringing LEGO minifigures to life? How did you use Unity3D, Vuforia SDK, and Affectiva’s SDK?

Vuksic: I decided to use Unity3D because it's a great, easy-to-use game engine, especially for quick prototypes. I started by creating recognition patterns for several LEGO minifigures so that the Vuforia SDK could recognize them in augmented reality. I then added Affectiva's SDK so the app could recognize the user's emotions and determine which facial expression to display on the minifigure.
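The final step of that pipeline is choosing an expression from the detected emotions. The minimal Python sketch below illustrates it, but it is not the app's actual Unity code. It assumes the emotion SDK reports a score from 0 to 100 for each emotion on every frame. The texture file names, the `choose_expression` and `render_frame` helpers, and the threshold of 20 are all hypothetical. A face is drawn only while the AR tracker reports that a minifigure is visible.

```python
from typing import Mapping, Optional

# Hypothetical mapping from emotion label to a face texture for the minifigure.
EXPRESSION_TEXTURES = {
    "joy": "face_smile.png",
    "anger": "face_angry.png",
    "sadness": "face_sad.png",
    "surprise": "face_surprised.png",
    "neutral": "face_neutral.png",
}


def choose_expression(scores: Mapping[str, float], threshold: float = 20.0) -> str:
    """Return the texture for the strongest emotion that has a texture.

    `scores` is assumed to map emotion names to values in the 0-100 range.
    Emotions without a texture are ignored. If no supported emotion reaches
    `threshold`, the neutral face is used instead.
    """
    supported = {
        label: value
        for label, value in scores.items()
        if label in EXPRESSION_TEXTURES and label != "neutral"
    }
    if not supported:
        return EXPRESSION_TEXTURES["neutral"]
    label, value = max(supported.items(), key=lambda kv: kv[1])
    if value < threshold:
        return EXPRESSION_TEXTURES["neutral"]
    return EXPRESSION_TEXTURES[label]


def render_frame(target_visible: bool, scores: Mapping[str, float]) -> Optional[str]:
    """Pick a face only when the AR tracker currently sees a minifigure."""
    if not target_visible:
        return None
    return choose_expression(scores)
```

With these assumptions, scores of `{"joy": 85.0, "anger": 3.0}` select `face_smile.png`. Uniformly weak scores fall back to the neutral face, so the minifigure does not switch expressions on faint signals.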

ADM: What do you envision for the future of emotion-enabled toys and applications?

Vuksic: There are already a lot of talking toys, interactive games, and the like, but I haven't seen augmented reality used this way so far. Hopefully this project will inspire someone to build this kind of application. In the future, I believe we'll see more and more interactive toys that are aware of the user and can tailor the play experience in real time. Such toys will respond not only to buttons or voice commands, but also to the user's emotions.

ADM: As a developer, how do you think emerging technologies like augmented reality and Emotion AI will impact the way apps are created? And beyond toys, what other kinds of applications do you think could benefit from leveraging AR and Emotion AI?

Vuksic: Augmented reality is showing up in more and more applications. With augmented reality, developers can overlay a lot of information on the world around us, creating more personalized experiences across industries. But for applications to truly interact with users, they should be aware of users' emotions. I believe the combination of these two technologies has many interesting use cases.

Beyond toys, educational applications are another example of apps that could benefit from AR and Emotion AI. Imagine, for example, that you're using an app to learn about the human body. Augmented reality could let you explore 3D models of organs. If the application also had Emotion AI, it could track your engagement with the educational materials. It could then offer more information about the details you're focused on, or generate a test on the topics you weren't paying close attention to.
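The "generate a test where attention was low" idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the app is assumed to log hypothetical `(topic, engagement)` samples while the learner views each organ model, with engagement scored from 0 to 100. The `topics_to_review` helper and the choice of a plain average are illustrative, not part of any SDK.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def topics_to_review(samples: Iterable[Tuple[str, float]], k: int = 2) -> List[str]:
    """Return the k topics with the lowest average engagement.

    Each sample is a hypothetical (topic, engagement) pair, where engagement
    is assumed to be a 0-100 score captured while that topic was on screen.
    """
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    for topic, engagement in samples:
        totals[topic] += engagement
        counts[topic] += 1
    averages = {topic: totals[topic] / counts[topic] for topic in totals}
    # Lowest average first; ties are broken alphabetically for a stable order.
    return sorted(averages, key=lambda topic: (averages[topic], topic))[:k]
```

For samples that average 75 for "heart", 25 for "lungs", and 50 for "liver", `topics_to_review(samples, k=2)` returns `["lungs", "liver"]`. Those would be the topics to quiz first.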

Goran Vuksic, iOS developer at Tattoodo

ADM: What are the benefits of using emotion AI for developers? For end-users?

Vuksic: With Emotion AI, developers can better understand app users' reactions and emotional responses, and use that information to enhance the user experience. Applications often use tracking systems to monitor user behavior, such as what a user clicks on within an application. From that data, developers can piece together an overall picture of user behavior and experience. Without data on the user's emotions, however, they can't really say how engaged a user was. For example, did a user laugh at the app's content and find it funny, or did they express anger or concern and then delete the app? Insights like these can be very helpful for developers who want to improve their applications and the user experience.
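Pairing ordinary interaction tracking with emotion data could look something like the following Python sketch. It assumes each analytics event carries the emotion scores captured at that moment. The `Event` record, the 0 to 100 score range, the threshold of 30, and both helper functions are hypothetical. The summary counts, per screen, how often each dominant emotion appeared. That is the kind of signal a click log alone cannot provide.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass
from typing import Dict, List, Mapping


@dataclass
class Event:
    screen: str                    # where the interaction happened
    action: str                    # e.g. "tap" or "scroll"
    emotions: Mapping[str, float]  # hypothetical 0-100 scores at that moment


def dominant_emotion(emotions: Mapping[str, float], threshold: float = 30.0) -> str:
    """Return the strongest emotion, or "neutral" if none reaches the threshold."""
    if not emotions:
        return "neutral"
    label, value = max(emotions.items(), key=lambda kv: kv[1])
    return label if value >= threshold else "neutral"


def emotion_summary(events: List[Event]) -> Dict[str, Counter]:
    """Count dominant emotions per screen across all logged events."""
    summary: Dict[str, Counter] = defaultdict(Counter)
    for event in events:
        summary[event.screen][dominant_emotion(event.emotions)] += 1
    return dict(summary)
```

A screen whose events are dominated by "anger" would stand out even if its click counts look healthy.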

End users, in turn, can benefit from emotion-aware applications, because these applications will better understand their behavior and the experience they want. For example, news applications today suggest similar posts based on what a user has clicked on so far. By adding Emotion AI to news applications, the recommendations could be even more targeted. The app could measure how engaged users were with specific kinds of content and recommend other articles like the ones that held their interest most.
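The engagement-weighted recommendation idea can be sketched in Python as follows. The sketch assumes the app records a hypothetical engagement score from 0 to 100 for each article a user reads, along with the article's category. The `recommend` helper and the catalog format are illustrative, and categories stand in for whatever notion of "similar content" a real news app uses. Unread articles are ranked by the user's average engagement with their category, not by click counts.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple


def recommend(
    reading_history: Iterable[Tuple[str, str, float]],
    catalog: Dict[str, str],
    n: int = 3,
) -> List[str]:
    """Recommend up to n unread articles.

    reading_history: hypothetical (article_id, category, engagement 0-100) records.
    catalog: maps every available article_id to its category.
    """
    totals: Dict[str, float] = defaultdict(float)
    counts: Dict[str, int] = defaultdict(int)
    seen: Set[str] = set()
    for article_id, category, engagement in reading_history:
        totals[category] += engagement
        counts[category] += 1
        seen.add(article_id)
    interest = {category: totals[category] / counts[category] for category in totals}

    candidates = [article_id for article_id in catalog if article_id not in seen]
    # Highest category interest first; categories never read score 0.
    # Ties are broken by article id so the order is deterministic.
    candidates.sort(key=lambda a: (-interest.get(catalog[a], 0.0), a))
    return candidates[:n]
```

Suppose a user read one sports article but stayed disengaged, and read two science articles with high engagement. This ranking puts unread science articles ahead of sports articles, even though a pure click count treats every read article the same.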

ADM: What advice would you give to other developers interested in integrating Emotion AI or AR into their projects?

Vuksic: Both augmented reality and Emotion AI have the potential to be very interesting for app users and developers, and combining them opens up even more possibilities for interesting and engaging projects. My advice to developers is to feel free to explore and to empower your apps with Emotion AI so you can understand your users better. And when appropriate, take advantage of augmented reality to make the experience that much more immersive and realistic.
